If you are worried about what happens when AI agents start pressing the “buy” button for your customers, you are not alone. I hear the same question from founders again and again: how do we enable secure agent transactions without losing visibility or control?

In this article, I will walk through the trust stack for agent commerce, from transparency and OAuth 2.0 agent auth to payments, explainability, and consumer trust signals you can ship today.

Summary: How To Secure Agent Transactions

Secure agent transactions are not just a security feature. They are a growth channel filter. If your agent stack is opaque, slow, or fragile, your best customers will route around it.

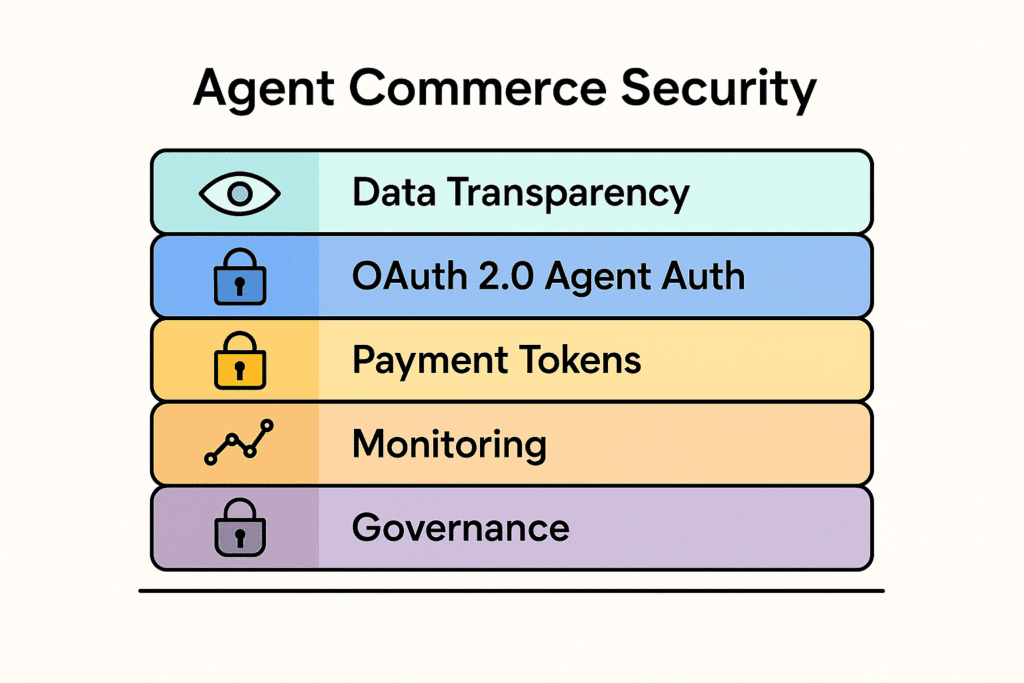

At a high level, you need five layers working together.

- Transparent data flows that customers can understand and audit.

- Strong identity with OAuth 2.0 based delegation for agents, not only humans.

- Payment rails that support tokenized, scoped, revocable spend.

- Explainable decisions and clear user controls around consent.

- Trust signals in the UI and in your policies that match real safeguards behind the scenes.

In practice this means combining modern identity standards, delegated payment protocols like OpenAI and Stripe’s Agentic Commerce Protocol, and governance that respects GDPR and the EU AI Act.

When you align transparency in agent commerce with concrete protections, you get a system where customers feel safe letting agents act on their behalf, and you avoid the worst edge cases before regulators force your hand.

Data Transparency In Agent Commerce

Most teams start by asking how to make agents smarter. The better question is how to make them more legible. If your data flows look like a black box, no trust framework will save you for long.

In agent commerce, transparency has three audiences. The customer, your internal teams, and regulators. Each one needs a slightly different view of the same reality.

Here is a simple way to structure it.

| Layer | What the agent sees | What the business controls |
|---|---|---|
| Customer data | Scopes, attributes, consent status | Collection, retention, lawful basis |
| Context data | Catalog, inventory, pricing, content | Business rules, ranking logic, overrides |
| Risk signals | Abstract risk flags, not raw logs | Models, thresholds, incident response playbooks |

If you design your events, logs, and dashboards with this table in mind, transparency becomes a product decision, not just a legal line in the footer.
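
As a minimal sketch of what such an event could look like, the snippet below splits one logged agent action along the three layers in the table. The class name `AgentEvent`, its field names, and the helpers `customer_view` and `to_log_line` are all hypothetical, chosen for illustration rather than taken from any standard.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class AgentEvent:
    """One logged agent action, split along the three layers in the table."""
    agent_id: str
    action: str
    customer_scopes: list[str]   # customer data: scopes the agent relied on
    consent_status: str          # customer data: e.g. "granted" or "withdrawn"
    context_refs: list[str]      # context data: catalog or pricing items read
    risk_flag: str               # risk signals: abstract flag, not raw logs
    internal_risk_score: float   # business-only: raw model output
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


# Hypothetical list of fields that never leave the business side.
INTERNAL_FIELDS = {"internal_risk_score"}


def customer_view(event: AgentEvent) -> dict:
    """Return only the fields a customer-facing dashboard may show."""
    return {k: v for k, v in asdict(event).items() if k not in INTERNAL_FIELDS}


def to_log_line(event: AgentEvent) -> str:
    """Serialize the full event for internal logs and audits."""
    return json.dumps(asdict(event), sort_keys=True)
```

The design choice here is that the customer view is derived from the same record as the internal log, so the two cannot drift apart.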

GDPR already pushes you toward data minimization and clear consent. The difference in agent commerce is volume and autonomy. Agents will touch more data, more often, and in more combinations. Privacy by design stops being a slogan and becomes an operational constraint.

In my own work with growth teams, the biggest shift has been treating “explain what happened” as a core requirement. Not only for attribution, but for compliance and trust. According to guidance on GDPR and AI-driven services from the European Commission, you are expected to provide meaningful information about automated decision-making, not vague statements about algorithms. If your logs, consent flows, and customer dashboards cannot support that story, the rest of your stack is already behind.

For a strategic overview of how this plugs into your e-commerce roadmap, I map out the bigger picture in The Complete Guide to B2A Commerce [Business to Agents]: Preparing Your Ecom Brand for the AI-First Era.

Authentication, OAuth 2.0 And Identity For Agents

Once the data layer is visible, the next question is simple. Who is this agent, and what can it actually do? Most current systems still treat agents as glorified API clients. That will not scale.

A robust identity stack for agents has three pieces.

- A stable cryptographic identity for each agent.

- Delegation from a real legal entity or user account.

- Session-level authorization using OAuth 2.0 agent auth patterns.

Modern work on decentralized identifiers and verifiable credentials is relevant here. Think of each agent as holding a wallet of signed proofs. For example, a proof that this agent is allowed to place orders up to a certain limit for a specific merchant, issued by your platform. That proof is separate from the user’s personal identity, but still linked to an accountable party.

On top of that, OAuth 2.0 gives you a familiar delegation pattern. You can design scopes such as agent:read_cart, agent:place_order, or agent:view_loyalty. Tokens are short lived. Refresh tokens are guarded. Every permission is explicit and revocable.

A practical pattern I recommend is a two-level flow.

- The human grants the agent access through an OAuth screen, with clear scopes and spending caps.

- The agent later uses those tokens to act, without re-involving the user for each action.

This mirrors how many integrations already work, but with much tighter constraints and monitoring.
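
The second level of that flow can be sketched as a check the backend runs on every agent action. This is an in-memory illustration, not a real OAuth server: `AgentGrant`, `is_allowed`, and the cap field are hypothetical names, and only the scope strings come from the examples above.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentGrant:
    """What the human approved on the consent screen (hypothetical shape)."""
    agent_id: str
    scopes: frozenset[str]
    spend_cap_cents: int
    expires_at: float        # Unix timestamp; short-lived by design
    revoked: bool = False


def is_allowed(
    grant: AgentGrant,
    scope: str,
    amount_cents: int = 0,
    now: Optional[float] = None,
) -> bool:
    """Check one agent action against the grant, without asking the user again."""
    now = time.time() if now is None else now
    if grant.revoked or now >= grant.expires_at:
        return False                     # revoked or expired grants never pass
    if scope not in grant.scopes:
        return False                     # every permission must be explicit
    return amount_cents <= grant.spend_cap_cents
```

In a real deployment the grant would be backed by access tokens issued by your authorization server, and the same checks would run where those tokens are validated.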

Trusted Execution Environments (TEEs) and remote attestation then close the loop. Hardware-backed proofs can confirm that the code running under a given agent ID has not been tampered with. Intel’s work on TEE attestation and several early ERC-8004 implementations point in that direction. You should not ship this on day one for every side project, but you should design your identity and OAuth model so you can layer stronger attestation in later without rewiring everything.

I unpack some of the operational tradeoffs around identity and permissions in my article on payments and transactions in agent commerce, since identity and payment risk are tightly coupled.

Payment Security And Secure Agent Transactions

If identity is the skeleton, payments are the bloodstream. This is where things break in the real world. The difference between a neat demo and a nightmare chargeback spreadsheet is payment governance.

Secure agent transactions require two qualities simultaneously. Flexibility for the agent to act in real time, and tight control around scope, amount, and counterparties.

The OpenAI and Stripe Agentic Commerce Protocol is a good reference model. In their joint announcement, they describe a system where an agent never touches the raw card number. Instead, it receives a short-lived token scoped to a specific merchant and amount. The merchant backend redeems that token as if it were a normal card, and Stripe’s fraud models travel with the token.

In practical terms, your payment layer should answer six questions for every agent initiated transaction.

| Question | Example control |
|---|---|
| Who is the principal | User account ID or business entity |
| Who is the agent | Agent ID plus version or policy set |
| What is the maximum amount | Currency, cap, and duration |
| Which merchant is allowed | Merchant IDs or categories |
| How is risk evaluated | Realtime risk score, flags, 3DS requirement |
| How can this be revoked immediately | Mandate dashboards, API revocation, alerts |
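
One way to make those questions concrete is to store the answers in a single mandate record and evaluate every agent-initiated payment against it. The sketch below is an assumption-heavy illustration: `Mandate`, `evaluate`, and the risk threshold are hypothetical, the risk score is assumed to come from your own or your processor's risk engine, and duration is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Mandate:
    """Answers to the six questions for one agent and one principal."""
    principal_id: str              # who is the principal
    agent_id: str                  # who is the agent (ID plus version)
    currency: str                  # what is the maximum amount ...
    max_amount_cents: int          # ... expressed as a cap in minor units
    allowed_merchants: set[str]    # which merchants are allowed
    max_risk_score: float          # how is risk evaluated (threshold)
    revoked: bool = False          # how can this be revoked immediately

    def revoke(self) -> None:
        self.revoked = True


def evaluate(
    mandate: Mandate,
    merchant_id: str,
    currency: str,
    amount_cents: int,
    risk_score: float,
) -> tuple[bool, str]:
    """Return (approved, reason) so that every decision can be logged."""
    if mandate.revoked:
        return False, "mandate revoked"
    if merchant_id not in mandate.allowed_merchants:
        return False, "merchant not allowed"
    if currency != mandate.currency:
        return False, "currency mismatch"
    if amount_cents > mandate.max_amount_cents:
        return False, "amount exceeds cap"
    if risk_score > mandate.max_risk_score:
        return False, "risk too high, require step-up"
    return True, "ok"
```

Returning a reason alongside the decision keeps the payment layer explainable, which matters once customers and support teams start asking why an order was blocked.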

Mandate-style permissions, similar to SEPA direct debit agreements, fit nicely here. The user completes strong authentication once, agrees to a clear mandate, and then the agent operates within that narrow lane. Proposed EU rules such as PSD3 and the accompanying Payment Services Regulation are actively reshaping how liability is allocated when a delegated party performs strong customer authentication. The key point for you as an operator is simple. The party that authenticates usually owns the risk.

Visa’s Trusted Agent Protocol follows the same logic from a card network angle. It uses signed HTTP messages and known keys to prove that a given agent is trusted by the network, without forcing merchants to redesign their checkout flow.
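
To show the general idea of signed requests checked against known keys, here is a deliberately simplified sketch. It is not Visa's wire format: it uses a shared-secret HMAC from the Python standard library as a stand-in, whereas network schemes would be expected to use asymmetric keys. The key registry, the signing-base layout, and all function names are assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical registry of keys the merchant already trusts.
KNOWN_KEYS = {"agent-key-1": b"demo-shared-secret"}


def signing_base(method: str, path: str, body: bytes, created: int) -> bytes:
    """Bind the signature to the method, path, body digest, and creation time."""
    digest = hashlib.sha256(body).hexdigest()
    return f"{method.upper()}\n{path}\n{digest}\n{created}".encode()


def sign(key: bytes, method: str, path: str, body: bytes, created: int) -> str:
    """Agent side: produce a hex signature over the signing base."""
    return hmac.new(key, signing_base(method, path, body, created),
                    hashlib.sha256).hexdigest()


def verify(key_id: str, signature: str, method: str, path: str,
           body: bytes, created: int, now: int, max_age: int = 300) -> bool:
    """Merchant side: accept only fresh requests signed with a known key."""
    key = KNOWN_KEYS.get(key_id)
    if key is None:
        return False                      # unknown agent key
    if not 0 <= now - created <= max_age:
        return False                      # stale or future-dated request
    expected = sign(key, method, path, body, created)
    return hmac.compare_digest(expected, signature)
```

The checkout endpoint itself does not change. Verification sits in front of it, which is the property that makes this style of scheme easy for merchants to adopt.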

When you architect your own flows, design them so you could plug into ACP, TAP, or similar schemes later. It is safer and cheaper than inventing a bespoke model that no bank wants to underwrite. I dig into concrete payment patterns and risk tradeoffs in more depth in my guide on managing payments and agent driven transactions.

Explainability, Consent And Visible Trust Signals

Most users will never read your OAuth scopes in detail. They will not study PSD3 liability timelines. They will rely on simple signals. Can I see what this agent did? Can I change what it is allowed to do? Can I stop it if it feels wrong?

That means explainability and consent are not abstract compliance topics. They are direct UX levers.

A practical pattern I like to use is to treat your agent interface like a banking app timeline. Every significant action appears in a feed with four fields visible.

- What the agent did, in plain language.

- Why it did it, with the key rule or goal highlighted.

- What data it used, in human readable categories.

- How to undo or adjust the permission that allowed it.

You do not need to expose your entire model reasoning. You do need to expose enough to let a normal person say “yes, that seems right” or “no, that is not acceptable”. GDPR already requires meaningful information about the logic involved in automated decisions, along with their significance and envisaged consequences, and the EU AI Act leans in the same direction with transparency obligations for high risk systems.
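
A timeline entry with those four fields can be sketched as a small record plus a plain-text renderer. `TimelineEntry`, `render`, and the settings URL below are hypothetical and only illustrate the shape of the data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimelineEntry:
    """One item in the customer-facing agent activity feed."""
    what: str                    # what the agent did, in plain language
    why: str                     # the key rule or goal behind the action
    data_used: tuple[str, ...]   # human-readable data categories
    adjust_url: str              # where to undo or change the permission


def render(entry: TimelineEntry) -> str:
    """Render the entry as four short lines a customer can scan quickly."""
    return "\n".join([
        entry.what,
        f"Why: {entry.why}",
        f"Data used: {', '.join(entry.data_used)}",
        f"Change this permission: {entry.adjust_url}",
    ])


entry = TimelineEntry(
    what="Reordered your usual coffee beans",
    why="Your standing rule: reorder when stock runs low",
    data_used=("order history", "saved address"),
    adjust_url="/settings/agents/permissions",
)
print(render(entry))
```

Keeping the entry to four fixed fields forces every agent action to come with a reason and an undo path before it can be shown at all.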

Consent management then becomes an ongoing relationship, not a one time cookie popup. Think dashboards where a customer can see all active mandates, scopes, agents, and their recent actions in one place, and adjust them in real time.

You can think about this like SEO for agents. Clear metadata, structured events, and readable explanations help both the human and the regulator “crawl” what your agents are doing.

If you ignore this layer, you will still have agents. You will just also have higher churn, more support tickets, and less defensible positions when something goes wrong. I cover the cultural and operational traps in my article on challenges and considerations in agent commerce, which pairs well with the more technical view here.

Threat Models, Zero Trust And Ongoing Governance

Here is the uncomfortable bit. Even if you nail identity, OAuth 2.0 agent auth, payments, and consent, your agents will still be attacked. They will be phished, poisoned, and abused. Threat modeling is no longer a niche security exercise. It is required product work.

Zero trust is a helpful starting point. Do not assume that an authenticated agent is always safe. Instead, assume every request could be compromised, and keep verifying.

From my work with teams rolling out early agent pilots, a handful of threat categories show up again and again.

| Threat type | Example scenario | Mitigation pattern |
|---|---|---|
| Prompt injection | Malicious page tells agent to ignore rules | Strong system prompts, content filters, sandbox |
| Data exfiltration | Agent leaks PII to third party API | Egress controls, DLP, strict scopes |
| Spend abuse | Agent exceeds expected order frequency | Behavioral risk scoring, caps, alerts |
| Policy drift | Agent “forgets” business constraints | Policy engines, regression tests, TEEs |

Continuous monitoring is what ties this together in production. You want behavioral baselines for each agent, per user and per merchant. If an agent suddenly starts making high value orders at 3 a.m. from a new region, or calling unusual APIs, your system should treat that as a security incident, not a fun experiment.
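
A behavioral baseline does not have to start as a machine learning project. The sketch below flags an order when it comes from a region the agent has never used or when the amount sits far outside the agent's own history. The function name, the z-score threshold of 3, and the minimum history of five orders are assumptions chosen for illustration, not recommended values.

```python
from statistics import mean, pstdev


def is_anomalous(
    history_cents: list[int],
    amount_cents: int,
    known_regions: set[str],
    region: str,
    z_threshold: float = 3.0,
) -> bool:
    """Flag an order that breaks this agent's baseline for one user."""
    if region not in known_regions:
        return True                       # first order from a new region
    if len(history_cents) < 5:
        return False                      # too little history; rely on caps
    mu = mean(history_cents)
    sigma = pstdev(history_cents)
    if sigma == 0:
        return amount_cents != mu         # any deviation from a flat history
    return abs(amount_cents - mu) / sigma > z_threshold
```

A flagged order should feed the same incident path as any other security signal, for example by pausing the mandate and alerting the user.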

Governance is the human side of this equation. Clear ownership for policies, documented change management, and an incident response process that includes agents explicitly. Many of the ideas from ISO 42001 on AI management systems map well here. They give you a way to formalize risk assessment, controls, and continuous improvement for your agent stack.

You will also run into complex edge cases around multi agent liability and emerging regulation. That is one reason I maintain a separate write up on the bigger challenges and considerations of agent commerce. It goes into scenarios where traditional contracts, insurance, and tort law intersect with these systems.

Q&A: Secure Agent Transactions In Practice

Q: What makes a transaction “secure” when an AI agent initiates it?

A: A secure agent transaction combines strong identity for the agent, scoped OAuth 2.0 permissions, tokenized payments, and continuous monitoring. You always know which principal the agent acts for, what spend and data scope it has, and how to revoke or challenge actions quickly if something looks off.

Q: How is OAuth 2.0 used differently for agents than for regular users?

A: For agents, OAuth 2.0 becomes a way to grant narrow, revocable powers to software, not just access to an API on behalf of a user. You design scopes around concrete actions and limits, such as order placement caps or allowed merchants, and you expect those tokens to be monitored and rotated more aggressively.

Q: Do small ecommerce brands really need this level of complexity?

A: Not on day one. But if you plan to let agents touch sensitive data or actual money, you need a roadmap. Start with clean logs, simple scopes, and transparent dashboards. Then layer in stronger attestation, delegated payment standards, and formal governance as volume grows.

Conclusion: Build Trust Before Volume

Agent commerce will not wait for your security roadmap to feel complete. Customers will start relying on agents that save them time and reduce friction, whether you are ready or not. Your job as a marketer or operator is to make sure those agents sit on top of a trust stack, not a pile of shortcuts.

Start with visibility. Clean, structured logs and timelines that a normal person can read. Then tighten identity with OAuth 2.0 based delegation and clear mandates. From there, plug into modern payment patterns and risk engines rather than building your own fragile version. I cover these mechanics side by side in my breakdown of payments and agent transactions.

As volume grows, the soft parts will matter more. Governance, culture, incident response, and the way your team talks about agent risk. If you want a deeper look at those edges, my article on challenges and considerations in agent commerce is a good next stop.

The brands that win in this phase will not simply have the most powerful agents. They will have the most trusted ones. That trust is not magic. It is the compound result of a thousand small, intentional design decisions that start right now.
